Measure, Design, Try: On Building New Things

My passion is helping users make sense of data at every stage: from ingestion and processing, through analysis, to (especially) exploration and visualization. Often, that entails creating new ways to interact with the data – visualizations that bring out new insights, or query tools that make it easy to ask important questions.

I’ve been reflecting recently on my research process – both the “user research” and the “academic research” varieties. I’ve gotten to experience both. My career started in academia with my PhD research and continued at Microsoft Research (MSR), which operates as a hybrid of academia and industry; since then, I’ve worked in industry settings. These settings have distinct constraints and objectives. Academic settings have an explicit goal of advancing the state of the art, usually by publishing research papers; in industry, the goal is to build products that drive sales.

I would suggest, though, that there is more overlap than it may seem: while the business contexts are very different, in both the real goal is to improve the user experience.

But how different are the academic and user research processes? I’ve come to realize that their project cycles look fairly similar. A full project moves through a cycle:

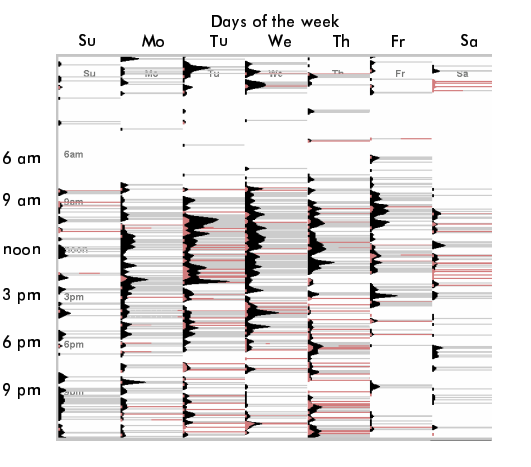

Measure what users are doing: build a model of user needs and goals through qualitative and quantitative data analysis.

Design a solution: find approaches that speak to these needs.

Try solutions, building the lowest-fidelity prototypes that can test them quickly

… and then

Measure whether the change worked, again with qualitative and quantitative techniques.

The Measure - Design - Try cycle

I was trained in this cycle: a typical academic design-study paper identifies a problem, proposes a design solution, prototypes it, and then measures whether it succeeded. Such a paper usually documents one round through the cycle, justifying each step with reference to the literature.

In industry, the cycle looks similar. We’d start by trying to understand a user need, based on signals from sales, product management, or user observations. It starts as a mystery, following the footprints left in users’ data: “if users really are having trouble with this feature, then we should see a signal that manifests in their data this way.”

Ideally, we’d then be able to reach out to users who were running into the issue and talk to them; one wonderful thing about working on a SaaS product is that we could follow up with those users.

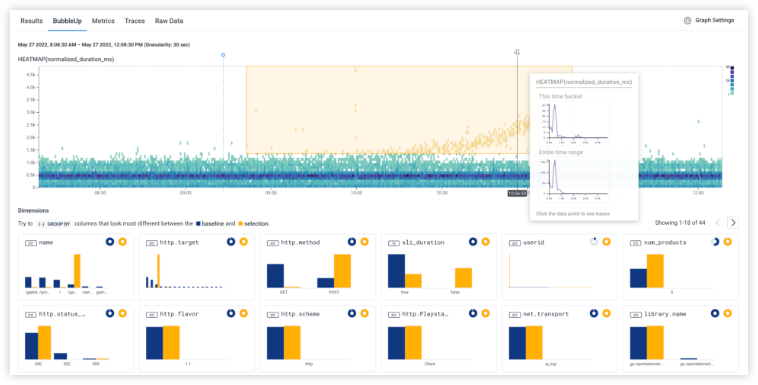

We could then identify the underlying user need. In the BubbleUp story, for example, I show that what we thought was a user need for query speed was actually a need for understanding data shapes.

A successful project builds just enough to test out the idea, gets it in front of users, and iterates. There are many ways to test a prototype with users, ranging from interviews, to lab experiments, to deploying placeholder versions behind a feature flag. The goal is to find a signal, as quickly as possible, of whether an idea is working as the design change marches toward release (or is mercifully killed).

Over time, the prototypes begin to look more like real code. Ideally, putting these versions into users’ hands also begins to show what work the real product will need.

While there are many differences between the academic and industry contexts, the commonalities far outweigh them. Here are some of the lessons I’ve learned from both:

Choosing an appropriate problem blends intuition with qualitative and quantitative signals, and the choice must be validated with data. It’s far too easy to pick the most obvious, low-hanging challenge.

Finding a solution in the design space is where domain expertise can be invaluable. My background in visualization often means that I can point to other solutions in the space, and can figure out how to adapt them. As the adage goes, “great artists steal” – a solution that someone else has used is likely to be both more reliable and more familiar.

It’s critical to prototype with ecologically valid data. It’s far too easy to show how good a design looks with idealized lorem ipsum text and well-behaved numbers, but does that resemble real user data?

The best way to get that ecologically valid data is to iterate. Get feedback from users as rapidly as possible, ideally with prototypes that reflect their own data. Learn, iterate, tweak the prototype, and try again. (Even in academic papers, the section about “we built a prototype to test a hypothesis” usually hides a dozen rounds of iteration and redesign, as ideas that looked great on paper run into subtleties once they become real.)

Since you know you’re going to measure your prototype, build in instrumentation from the beginning: state up front what you’ll want to measure, and make sure you leave in hooks to read it back later.
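As an illustration only, here is a minimal sketch of that idea. It assumes a Python prototype and a simple in-memory event log. The `Instrumentation` class, its methods, and the metric names are all hypothetical and do not come from any particular tool. The metrics are declared before the prototype is used, and two read-back hooks report what happened.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Instrumentation:
    """Hypothetical in-memory event log for a prototype.

    The set of metric names is declared up front, before anyone uses the prototype.
    """
    metrics: frozenset
    events: list = field(default_factory=list)

    def record(self, name: str, **props) -> None:
        # Reject events we never committed to measuring, so the plan stays explicit.
        if name not in self.metrics:
            raise KeyError(f"undeclared metric: {name}")
        self.events.append({"name": name, "ts": time.time(), **props})

    def count(self, name: str) -> int:
        # Read-back hook: how many times did this event occur?
        return sum(1 for e in self.events if e["name"] == name)

    def distinct_users(self, name: str) -> set:
        # Read-back hook: which users triggered this event at least once?
        return {e["user"] for e in self.events if e["name"] == name and "user" in e}


# Example: decide before the study that we care about chart opens and filters.
log = Instrumentation(frozenset({"chart_opened", "filter_applied"}))
log.record("chart_opened", user="u1")
log.record("filter_applied", user="u1", field="latency")
log.record("filter_applied", user="u2", field="status")
print(log.count("filter_applied"))                   # 2
print(sorted(log.distinct_users("filter_applied")))  # ['u1', 'u2']
```

Rejecting undeclared events is one design choice among several. It keeps the measurement plan honest, but a real prototype might prefer to log unknown events and flag them for review later.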

Conclusion

This cycle of measure-design-try-measure is the core of every project I’ve built. Knowing that we’re working in this cycle drives our decisions: What questions do we need to answer at each iteration? How much prototyping is enough? Reframing the discovery process through this lens helps guide design toward new, more exciting tools.